With LLMs, Enterprise Data is Different

Enterprise projects pose unique challenges and must be approached differently

Welcome to the age of Enterprise LLM Pilot Projects! A year after the launch of ChatGPT, enterprises are cautiously but enthusiastically progressing through their initial Large Language Model (LLM) projects, with the goal of demonstrating value and lighting the way for mass LLM adoption, use case proliferation, and business impact.

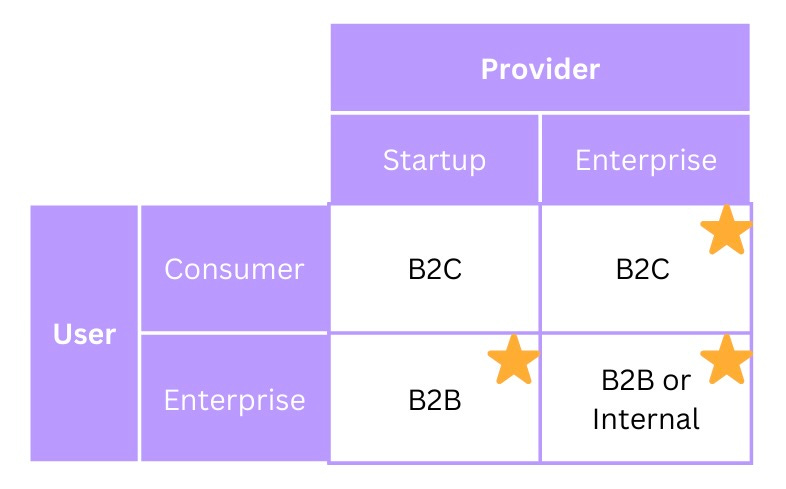

Developing these solutions either within or for mature businesses is fundamentally different from startups developing solutions for consumers. Yet the vast majority of advice on LLM projects comes from a startup-to-consumer perspective, intentionally or not. The enterprise environment poses unique challenges and risks, and following startup-to-consumer guidance without regard for the differences could delay or even halt your project. But first, what does “enterprise” mean?

There’s currently a strong but waning bias of LLM content toward the consumer-user and startup-provider portion of the provider-user matrix above. It’s largely driven by the speed to market and click-hungriness of VC-funded startups and independent creators.

Where then does the enterprise guidance that does exist come from, and can you trust it? This may surprise you, but very few enterprises have actually completed LLM projects. At this point, few entities such as traditional consulting orgs are trading on actual experience; most are instead repackaging web content. There has not been sufficient time for much enterprise experience to accumulate, let alone disseminate. There are plenty of exceptions, but the majority of future LLM experts from within enterprise are still busy with their first projects, and the number of experienced startup providers for enterprise is still limited.

As a B2B software vendor I’ve had the privilege of developing and implementing LLM projects (the Startup/Enterprise cell in the provider/user matrix above) for enterprises since 2021, and have overcome the set of unique challenges to complete multiple end-to-end implementations. In this article, I’ll share some of the most important dimensions I’ve observed along which the data involved in enterprise LLM projects differs from startup/consumer projects, and what it means for your project. Use this to de-bias information coming from a startup/consumer perspective, inform yourself of risks, and build an intuition around enterprise applications of LLMs.

Data is Different

Specifically, this post will focus on the data used for data-backed applications, which cover a majority of enterprise LLM use cases. Let’s move beyond the LLM parlor tricks of cleverly summarizing an input or answering a question from its memory. The value that can be generated by allowing LLMs to operate over data is much greater than the value from interacting with standalone LLMs. To prove this, imagine an application that consists solely of LLM operations, taking user input and transforming it to produce an output. Now imagine that this same system can also access data stored outside the model: the capability is additive, and so is the value. This data could be anything: emails, client records, social graphs, code, documentation, call transcripts…

Almost always, the data comes into play through information retrieval - the application will look up relevant information, and then interpret it using an LLM. This pattern is commonly known as RAG (Retrieval-Augmented Generation). So how does data differ between enterprise and startup/consumer applications, and what does that mean for your project?

Open 🌍 vs Closed🚪 Domain

Open-domain data refers to a wide array of topics that aren't confined to any specific field or industry. This is the kind of data that consumer applications or public-facing enterprise offerings deal with. On the other hand, closed-domain data is typically found in enterprise applications. This type of data is more specialized and domain-specific, and often includes terminology, acronyms, concepts, and relationships that are not present in open-domain data.

Real-world examples:

Enterprise Data

Pharmaceutical R&D documentation 🚪

E-commerce product listings 🌍/🚪

Customer support records 🌍/🚪

Company policies 🚪

Quarterly earnings reports 🌍

Consumer Data

Social media posts 🌍

Personal finance records 🌍

Podcast transcripts 🌍/🚪

Recipes 🌍

Travel journals 🌍

Implications for your project

Failing to account for closed-domain data in your application could render it totally ineffective. Most commercial LLMs are trained on open-domain data and, without help, will simply fail to correctly interpret terms and topics they haven’t encountered in their training. Likewise for the embedding models used in vector search. For example, many organizations have massive vocabularies of acronyms that are used as the primary handle for certain concepts, and without help an LLM or retrieval system may be unable to relate the acronyms to the concepts they represent.

However, don’t be fooled into undertaking costly retraining projects if it’s not actually needed or there are simpler solutions! Many domains that seem closed are in fact open. For example, commercial LLMs have been trained on an immense amount of public financial data, and intuitively understand it because, while finance is a particular domain, it is not a closed domain.

💡 Particular Domain ≠ Closed Domain

Even fields like medicine and law are heavily represented in public data, although subdomains often exhibit closed-domain properties (same for finance). Think twice before pursuing training projects or specialized models and evaluate whether they are truly needed. Expect more posts on how to assess the openness of your domain’s data.

In most cases of closed-domain data, you can get quite far by looking up synonyms or definitions of closed-domain terms and injecting them into your retrieval and generation systems. However, this requires you to have structured information around synonyms, relationships, or definitions, which may not be readily available. When possible, this pattern is often the best and simplest solution and we’ll explore related techniques in future posts.
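As a minimal sketch of this pattern, assuming a small hand-built glossary already exists, a query can be expanded with definitions before it reaches retrieval or generation. The `GLOSSARY` contents and the `expand_acronyms` helper are hypothetical names for illustration, not part of any library:

```python
import re

# Hypothetical glossary. In a real project this would come from the
# organization's own structured data (wiki, data dictionary, SME input).
GLOSSARY = {
    "VOC": "voice of customer",
    "OKR": "objectives and key results",
}


def expand_acronyms(text: str, glossary: dict) -> str:
    """Append the definition after each known acronym in the text."""

    def replace(match: re.Match) -> str:
        term = match.group(0)
        definition = glossary.get(term)
        # Unknown all-caps terms are left untouched.
        return f"{term} ({definition})" if definition else term

    # Whole words of two or more characters: a capital letter followed
    # by capital letters or digits.
    return re.sub(r"\b[A-Z][A-Z0-9]+\b", replace, text)


query = "Summarize the latest VOC report for the OKR meeting"
expanded = expand_acronyms(query, GLOSSARY)
print(expanded)
```

The same expansion could be applied to documents at ingestion time so that the embedding model sees the definition alongside the acronym; which side to expand is a design choice that depends on your data.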

💡 Prefer bespoke systems to bespoke models

In summary:

Startup/consumer applications’ bias toward open-domain data makes them a natural fit for off-the-shelf commercial LLMs and embedding models for vector search

Enterprise applications, when they involve closed-domain data, often necessitate different, more complex designs to provide acceptable performance

Start by assessing whether your domain is open or closed, and if it’s closed identify whether structured information about the domain exists (synonyms, definitions, acronyms, etc)

Size

Businesses tend to have more data than individual consumers. The simplest proof of this is that an enterprise is a collection of employees, clients/consumers, and processes, all of which generate data, while a consumer is a population of one. Businesses also tend to undertake projects over longer spans of time than consumers, with a higher density of records within those spans. We’ll split the size dimension into 3:

Population 👪 vs Unit 🧑

Archival 💾 vs Fresh 🍎

Many modalities 🌈 vs Fewer modalities 🏁

Enterprise projects tend to occupy the “more data” side of each scale. This matters because, as the amount of data you retrieve from grows, so does the likelihood that unhelpful records will be considered relevant, simply because there are more unhelpful records, or “noise.”

💡 More data causes less accurate retrieval

What’s more, certain types of data generate more noise than simple random data. Those are sometimes referred to as distractors, and we’ll see how they can occur in some of the subcategories.
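To make the intuition concrete, here is a toy model rather than a measured result. Assume there is one truly relevant record and $N$ noise records, and that each noise record independently outscores the relevant one with some small probability $p$ (both the independence and the single value of $p$ are simplifying assumptions). Then:

$$
P(\text{relevant record ranked first}) = (1 - p)^{N}, \qquad \mathbb{E}[\text{noise records ranked above it}] = pN
$$

Under these assumptions, the expected number of noise records ranked above the relevant one grows linearly with $N$, and the chance of a clean top result shrinks geometrically. Distractors can be thought of as records with a much larger $p$ than random noise.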

Population 👪 vs Unit 🧑

Each part of an enterprise tends to deal with groups of things: employees, clients, products, projects. Each unit in these groups can generate anywhere from a few to millions of records that your application may need to handle. Individual consumers may be one of those units, and therefore represent a smaller slice of data. To understand your data along this dimension, ask: does it deal with a single entity or with many? Are those entities known, or even knowable?

Real-world examples:

Enterprise Data

E-commerce product listings 👪

Customer support records 👪

Customer support records for a particular customer 🧑

A company’s meeting transcripts 👪

A team’s meeting transcripts 🧑

A company’s github repositories 👪

Startup/Consumer Data

My personal call transcripts 🧑

My github repositories 🧑

Implications for your project

Most enterprise datasets are well beyond the point where it becomes critical to limit the amount of data your application operates over, and population-level data can easily push you past it. Even if your application contains an entire population’s data, you may be able to transform it into unit-level data at runtime by filtering on a target entity (client, product, project, etc), clearing the path for a simpler and more accurate retrieval system. However, in some projects this can be impossible, for example if your client wishes to provide an uncurated data dump of PC hard drives. There are several approaches to handling this:

When scoping the project, focus on a problem where the supporting data is well structured and able to be filtered down to a unit level.

Request that your client provides a smaller or segmented dataset.

If the above approaches are unsuccessful, identify entities by which the data can be filtered in coordination with subject matter experts. This may dictate new data extraction/enrichment and query understanding processes, but for large datasets it may be critical.

If you aren’t successful with any of these, expect to spend a lot of effort reducing noise in your retrieval system. For both startup/consumer and enterprise applications, you should always seek to relieve the burden on the retrieval system by only searching through the relevant slice of data. This isn’t optional with massive enterprise datasets. Data slicing approaches will be addressed more in future posts.
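The filtering idea above can be sketched as follows, assuming each record carries an entity identifier as metadata. The `Record` type, the `entity_id` field, and the toy keyword scorer are all hypothetical stand-ins; a real system would use its own metadata schema and ranker:

```python
from dataclasses import dataclass


@dataclass
class Record:
    entity_id: str  # hypothetical metadata field, e.g. a client or product ID
    text: str


def slice_by_entity(records, entity_id):
    """Reduce population-level data to unit-level data for one entity."""
    return [r for r in records if r.entity_id == entity_id]


def keyword_score(query, text):
    """Toy relevance score: number of distinct query words found in the text."""
    text_words = set(text.lower().split())
    return sum(1 for w in set(query.lower().split()) if w in text_words)


def retrieve(records, query, entity_id, top_k=2):
    # Slice first, so the ranker only ever sees the relevant unit's records.
    candidates = slice_by_entity(records, entity_id)
    ranked = sorted(
        candidates, key=lambda r: keyword_score(query, r.text), reverse=True
    )
    return ranked[:top_k]


records = [
    Record("client-a", "refund requested for damaged order"),
    Record("client-b", "refund requested for late delivery"),
    Record("client-a", "asked about upgrading subscription"),
]
results = retrieve(records, "refund status", entity_id="client-a", top_k=1)
print([r.text for r in results])
```

Here the near-identical record for `client-b` never reaches the ranker, so it cannot act as a distractor for a question about `client-a`.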

Archival 💾 vs Fresh 🍎

Enterprises love bookkeeping, at times because they are forced to keep records for compliance reasons, other times because they’ve simply been doing the same work for many years and have accumulated a lot of documentation. Clearly, this contributes to the data size issue by introducing noise, but it can also add a more aggressive form of noise: highly semantically similar records which, if unaccounted for, will become adversarial distractors to both your retrieval and generation systems. A good heuristic for whether this will be a problem is whether the data pertains to projects spanning long periods of time. All the enterprise examples below can be thought of as part of long-running projects (product development for a product, project management for a project, customer support for a customer) while the consumer examples are related to relatively short-lived events or objects. There are plenty of exceptions, but this is going to be a challenge more frequently in enterprise.

Real-world examples:

Enterprise Data

Quarterly voice of customer reports 💾

Project report draft versions 💾

Product spec versions 💾

Quarterly OKR meeting transcripts 💾

Startup/Consumer Data

Recipes 🍎

Travel journals 🍎

Social media posts 🍎

Implications for your project

If your project data has a lot of versions, entries, or copies, you should plan to address it from the beginning. It can greatly increase retrieval noise, and can lead to contradictory or untrue inputs to your generation system. The best courses of action are generally:

Request that the data be curated and only the fresh data is provided for your application (not possible if previous versions of the records are also relevant).

If the first approach is unsuccessful, consider a data slicing approach similar to the one for addressing population-level data. This can help get to more relevant retrieval results before generation.

Instruct your generation system how to handle different versions, dates, and contradictory records through prompting, few-shot examples, or fine-tuning. Note that this will require extracting version or date information and passing it through your system to the generator.

Date-based ranking: it’s best to avoid this, as your system probably already has a ranking system and blending ranking signals is harder than it sounds. However, if you can replace or follow your original ranking with a relevant/not-relevant filter, then a date ranking may be useful.

It’s best to avoid this challenge altogether by insisting on high quality data rather than building complex passive version control or multi-signal reranking systems.
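If you do end up needing date handling, one way to sketch the "filter, then rank by date" option is shown below. It assumes a version date was extracted at ingestion and that an existing ranker supplies a relevance score. The `Hit` type, the `0.5` threshold, and the prompt format are illustrative assumptions only:

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Hit:
    doc_id: str
    version_date: date  # assumed to be extracted at ingestion time
    relevance: float    # score from whatever ranker the system already uses


def filter_then_sort_by_date(hits, min_relevance=0.5):
    """Turn relevance into a yes/no filter, then order survivors newest first."""
    relevant = [h for h in hits if h.relevance >= min_relevance]
    return sorted(relevant, key=lambda h: h.version_date, reverse=True)


def format_for_prompt(hits):
    """Pass the date through to the generator so it can reason about versions."""
    return "\n".join(f"[{h.version_date.isoformat()}] {h.doc_id}" for h in hits)


hits = [
    Hit("spec-v1", date(2022, 1, 10), 0.91),
    Hit("spec-v3", date(2023, 6, 2), 0.88),
    Hit("unrelated-memo", date(2023, 9, 1), 0.20),
    Hit("spec-v2", date(2022, 11, 5), 0.90),
]
ordered = filter_then_sort_by_date(hits)
print(format_for_prompt(ordered))
```

Because relevance is only used as a threshold, the two signals are never blended: the newest memo is dropped for being irrelevant, and the three spec versions are ordered purely by date.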

Few 🏁 vs Many 🌈 Modalities

How many discrete forms of data does your application need to support? Data generated directly by individuals usually falls into our natural language modalities: text, audio, image, video. Within those, we can look even deeper: text records may contain emails, code, documents, presentations, websites… which might contain other forms of media themselves! Then there’s data about individuals and their behavior, and about other entities (like products or events), which are often represented in structured formats like tables and graphs. The average enterprise will own a mixture of all of these, whereas a consumer often only consciously stores and processes a few. Think of the difference between a company’s data warehouse and your personal iCloud. In some enterprise areas (like federated search) you will need to deal with several modalities at once, while in others you will focus on one at a time.

Real-world examples:

Enterprise Data 🌈

Project kickoff reports

Click behavior data

CRM data

Product spec sheets

Quarterly OKR meeting transcripts

Property brochures

Startup/Consumer Data 🏁

My Notion pages

To-do lists

Phone calls

Social media posts

Implications for your project

Another way of looking at this is that enterprises have myriad tiny categories of media, each containing many entries, whereas individual consumers tend to have fewer overall categories but (as we’ll see in the next section) greater variety within those categories. This property of enterprises actually turns out to be an advantage: the different types of content can help you scope your retrieval using the slicing technique described in the data size section, and even more importantly, scope your solution as narrowly as possible while still solving a user problem. As a general rule, only operate over the types of content that are necessary to address your use case. As much as possible, treat use cases that involve different modalities as different projects and solve them individually, before combining for a one-size-fits-all solution.

Data Regularity (🎲 vs 🧾)

Though enterprises may have more different forms of content, all the examples of a certain kind are likely to be fairly similar. In structured data like graphs and tables this is self-evident (because they were generated by a consistent business process), but why might it be the case with human-generated data like reports, presentations, and phone calls? The simplest reason is that enterprises and even entire industries have fairly reliable norms and procedures around communication, while those between consumers are more diverse. For example, sales phone calls tend to follow more reliable structures than phone calls between friends.

Real-world examples:

Enterprise Data 🧾

Project kickoff reports

Click behavior data

CRM data

Product spec sheets

Quarterly OKR meeting transcripts

Real estate brochures

Startup/Consumer Data 🎲

Personal email

Phone calls

Social media posts

Implications for your project

Depending on where your project falls on the consumer-enterprise spectrum, you may be able to make more assumptions about what the data looks like. If you’re building a product for internal use or to work with regulated documents, you have the highest certainty about the data structures your system will need to handle. On the other hand, if you’re building a solution used by disparate enterprise customers, you may need to either build your solution flexibly enough to handle variable data schemas or perform discovery and system adaptation for each customer’s data. The parts of an LLM application in which differences may arise include pretty much everything: data ingestion, retrieval, and generation. For example:

Unfamiliar data not represented in few-shot prompt examples increases defect rate

Unexpected formats in documents can introduce retrieval defects due to chunking

The more you know about the data, the simpler and more reliable a system you can build to work with it. So use this knowledge when it’s available and design flexibly when it’s not.

Access Control (📁 vs 🗂)

Possibly the most mundane of the differences between the data in consumer and enterprise applications is who is allowed to access it. In consumer applications, this is typically straightforward: a user can access their data, others can’t (except maybe an admin). However, in enterprise this situation can become extremely complex, with numerous and frequently updating access groups for particular resources, and different tiers of access. The stakes are often high, with employees needing access to certain data to perform their jobs but also needing access immediately revoked if they leave that role.

Real-world examples:

Enterprise Data

Product line documentation 🗂

HR records 🗂

Consumer Data

Crypto brokerage transactions 📁

Frequent flyer account 📁

Implications for your project

Unfortunately there’s no way to avoid a lot of engineering to implement access control properly. The best thing you can do for your project is to recognize this early and make sure you have the engineering expertise and client access necessary to figure it out. Definitely look to integrate with the data source’s identity provider if applicable, and look out for frameworks to help with the integration. Still, there are many decisions to be made about how your system handles different user groups, for example: in a system involving retrieval, will results be filtered before or after being retrieved (sometimes called early- vs late-binding)?
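To illustrate the before-or-after question, here is a small sketch that takes "early" to mean filtering on permissions before the top results are selected, and "late" to mean filtering afterward. The `Doc` type, group names, and scores are hypothetical; a real system would get group membership from the identity provider:

```python
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    allowed_groups: frozenset  # groups permitted to read this document
    score: float               # relevance score from a hypothetical retriever


def can_read(doc, user_groups):
    """A user may read a document if they share at least one group with it."""
    return bool(doc.allowed_groups & user_groups)


def early_binding(docs, user_groups, top_k):
    """Filter by permission first, then take the top-k of what remains."""
    permitted = [d for d in docs if can_read(d, user_groups)]
    return sorted(permitted, key=lambda d: d.score, reverse=True)[:top_k]


def late_binding(docs, user_groups, top_k):
    """Take the top-k first, then drop results the user may not see."""
    top = sorted(docs, key=lambda d: d.score, reverse=True)[:top_k]
    return [d for d in top if can_read(d, user_groups)]


docs = [
    Doc("hr-salary-bands", frozenset({"hr"}), 0.95),
    Doc("product-roadmap", frozenset({"product", "engineering"}), 0.90),
    Doc("eng-onboarding", frozenset({"engineering"}), 0.70),
]
user_groups = frozenset({"engineering"})
early = [d.doc_id for d in early_binding(docs, user_groups, top_k=2)]
late = [d.doc_id for d in late_binding(docs, user_groups, top_k=2)]
print(early, late)
```

In this toy data neither approach exposes the HR document, but the post-retrieval filter returns only one result because a restricted document occupied a top-k slot. That trade-off between result completeness and where the permission check lives is one of the decisions to settle early.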

Conclusion

I hope this post will raise some flags at the beginning of your enterprise LLM project that save you time later on and help you form a de-biased intuition around LLM projects.
